SignSavvy

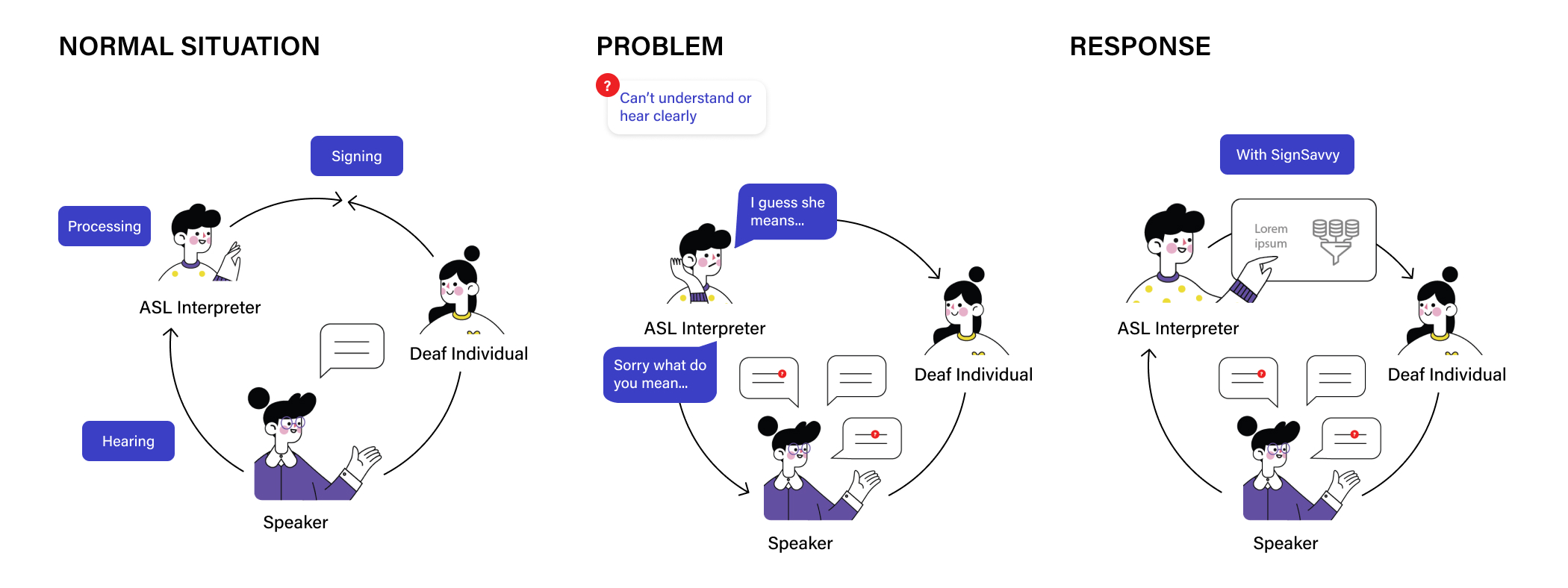

An app that assists novice ASL interpreters with imagery and text during simultaneous interpretation

Case Study

UX/UI

Illustrator

Photoshop

Lots of sticky notes

Winnie Chen

Data Synthesis

Ideation & Design

Concept & Usability Testing

Photography

UX & UI Design

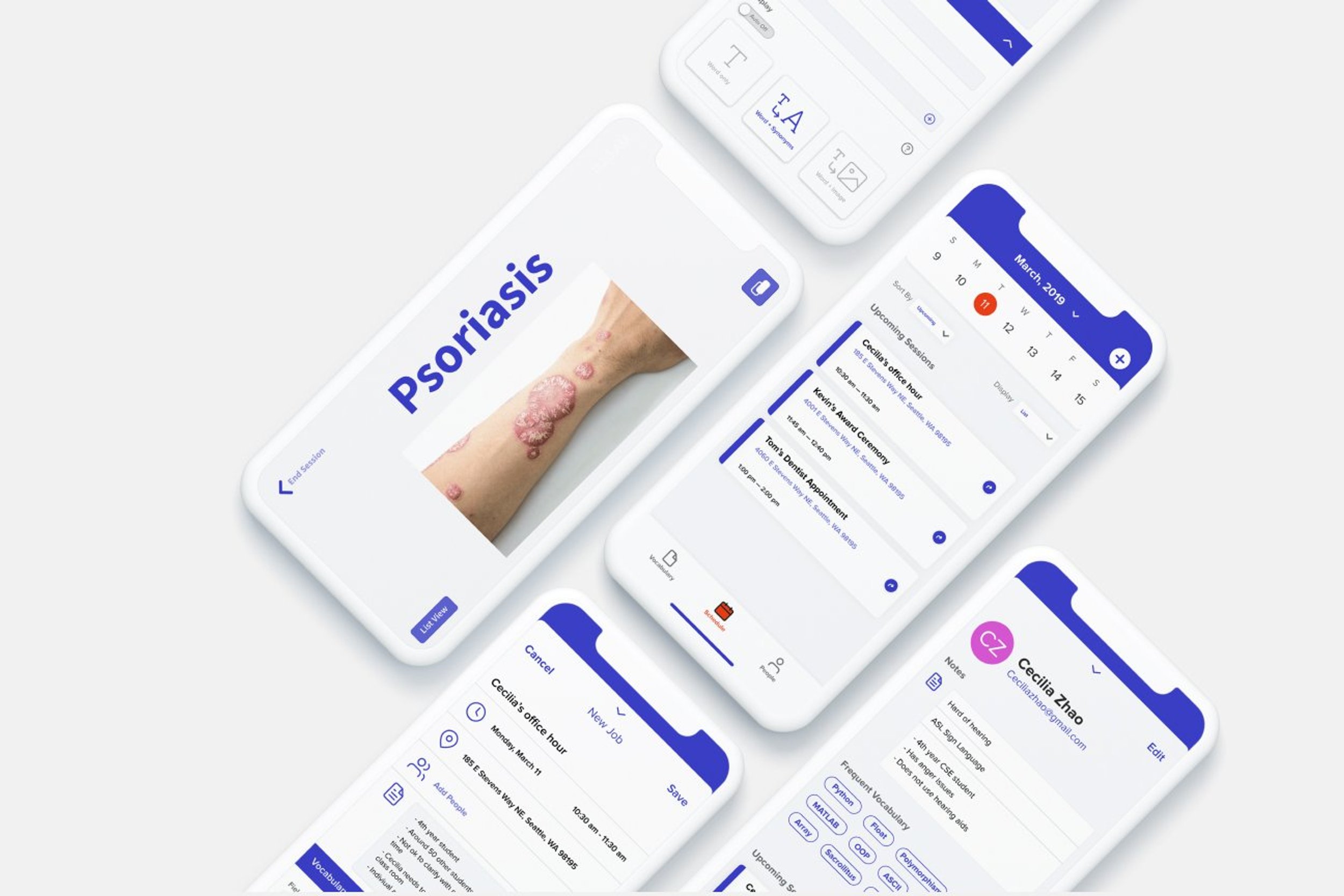

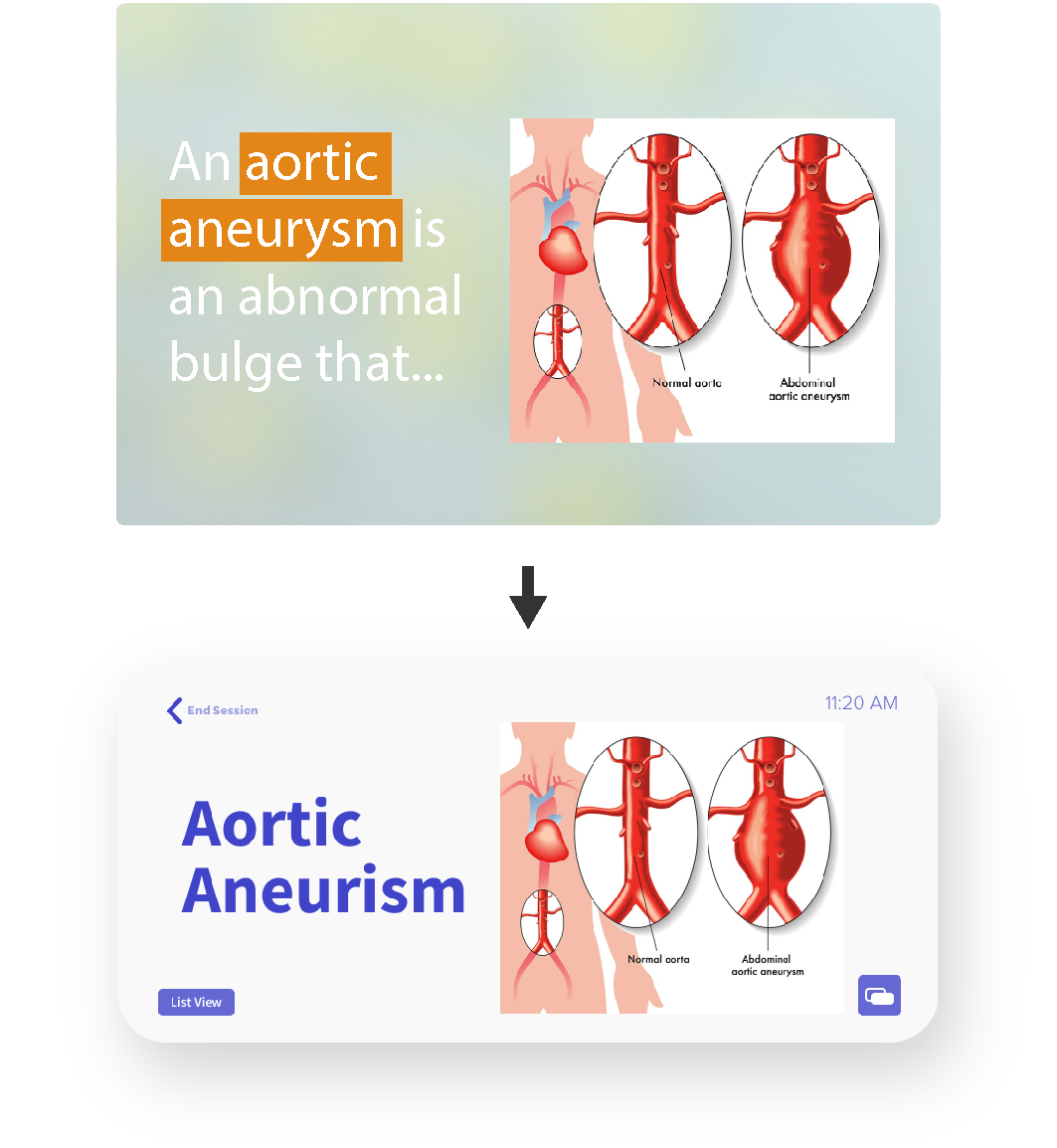

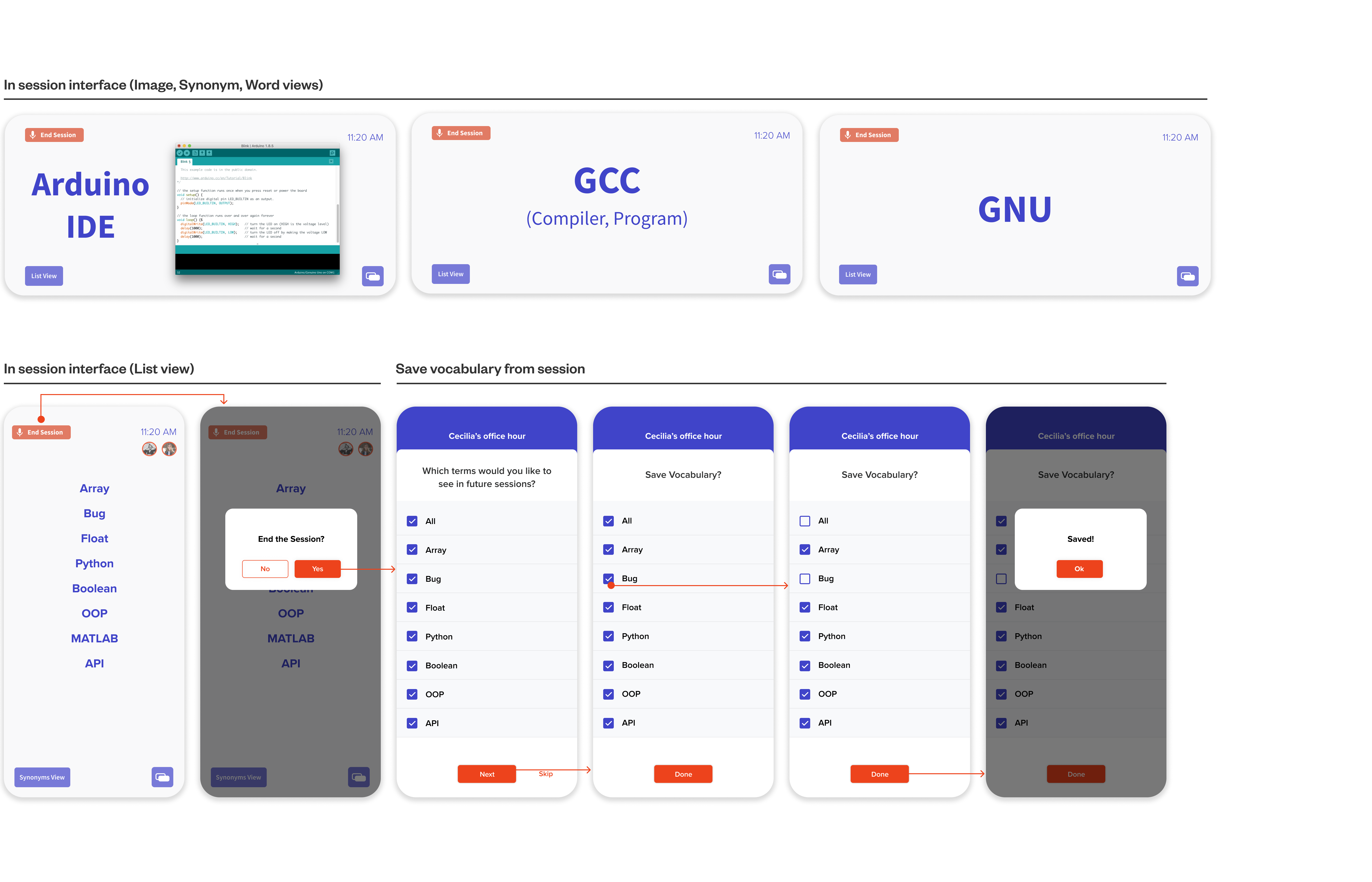

REAL-TIME ASSISTANCE

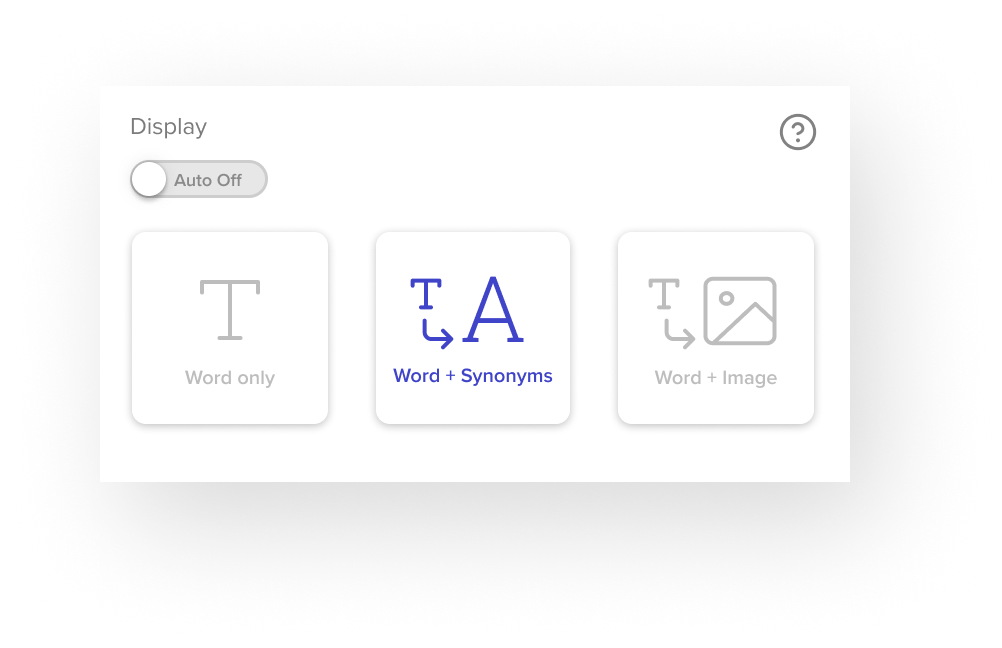

When difficult or technical vocabulary is used during a simultaneous interpretation session, SignSavvy displays the word with corresponding synonyms or an image to help interpreters quickly understand it in the context of the conversation.

Not only would this help interpreters if they mishear a term, but it would also let them see the spelling if they need to fingerspell it.

Why Interpreters?

Interpreters are a vital part of accessibility and inclusion for deaf and hard of hearing individuals. However, interpretation is a very stressful job requiring a lot of experience, knowledge, and energy. It is especially difficult for novice interpreters who start out interpreting an unfamiliar topic with terms they do not know. Because of this, there are very few interpreters despite the high demand.

Initial Research

Research Questions

As we defined our users, we wanted to scope out our problem space with research questions. After doing secondary research on the role of interpreters and deaf culture, we wondered…

Participants

We reached out to our school's Disability Services Office for contact information, then cold-emailed interpreters and experts in the area. Our participants were happy to help despite their busy schedules.

Research Methods (Discover)

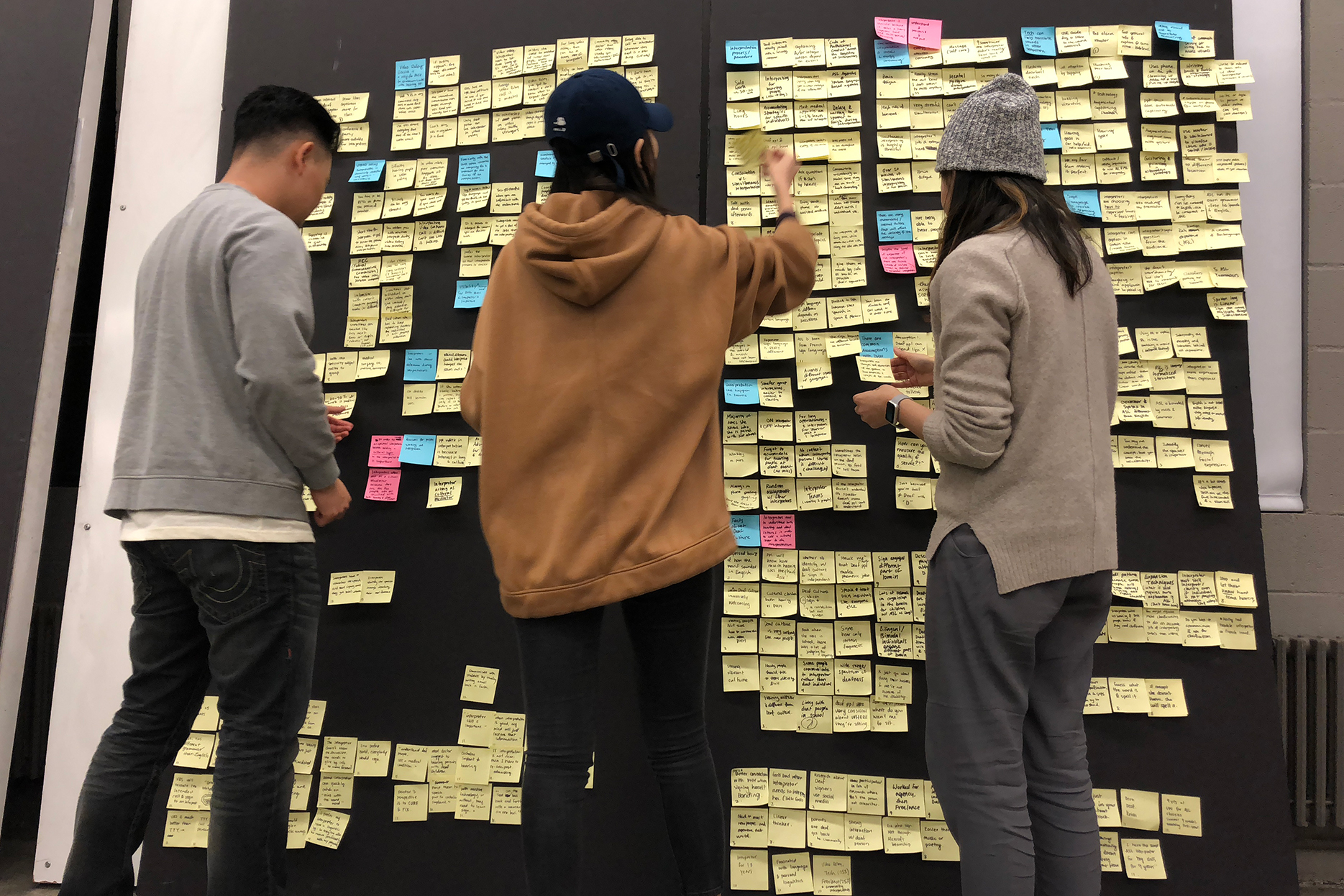

Data Synthesis (Define)

Key Insights

Design Principles

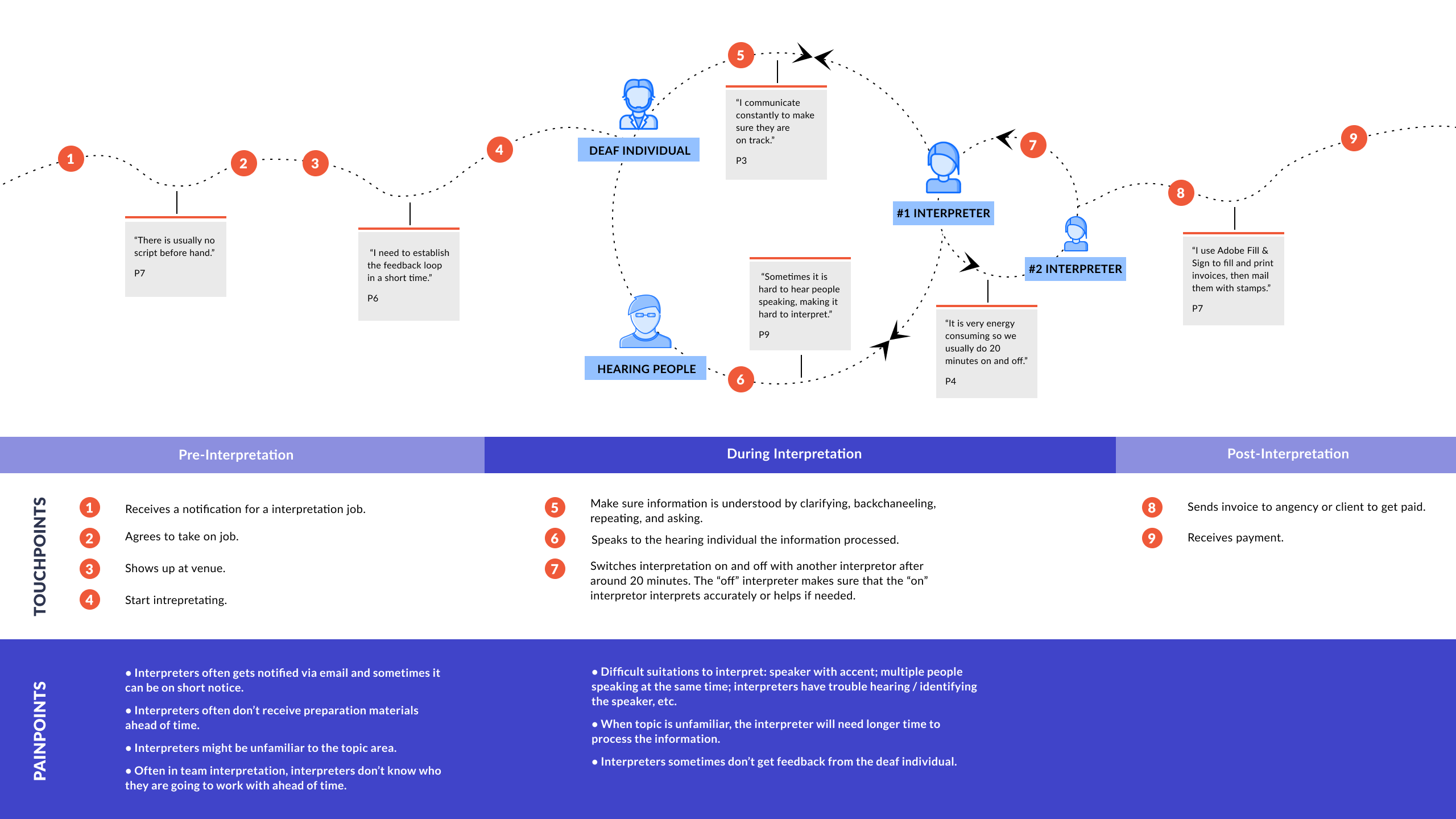

How might we assist interpreters by equipping them with information they need for their interpretation sessions to offset their cognitive load?

Ideation

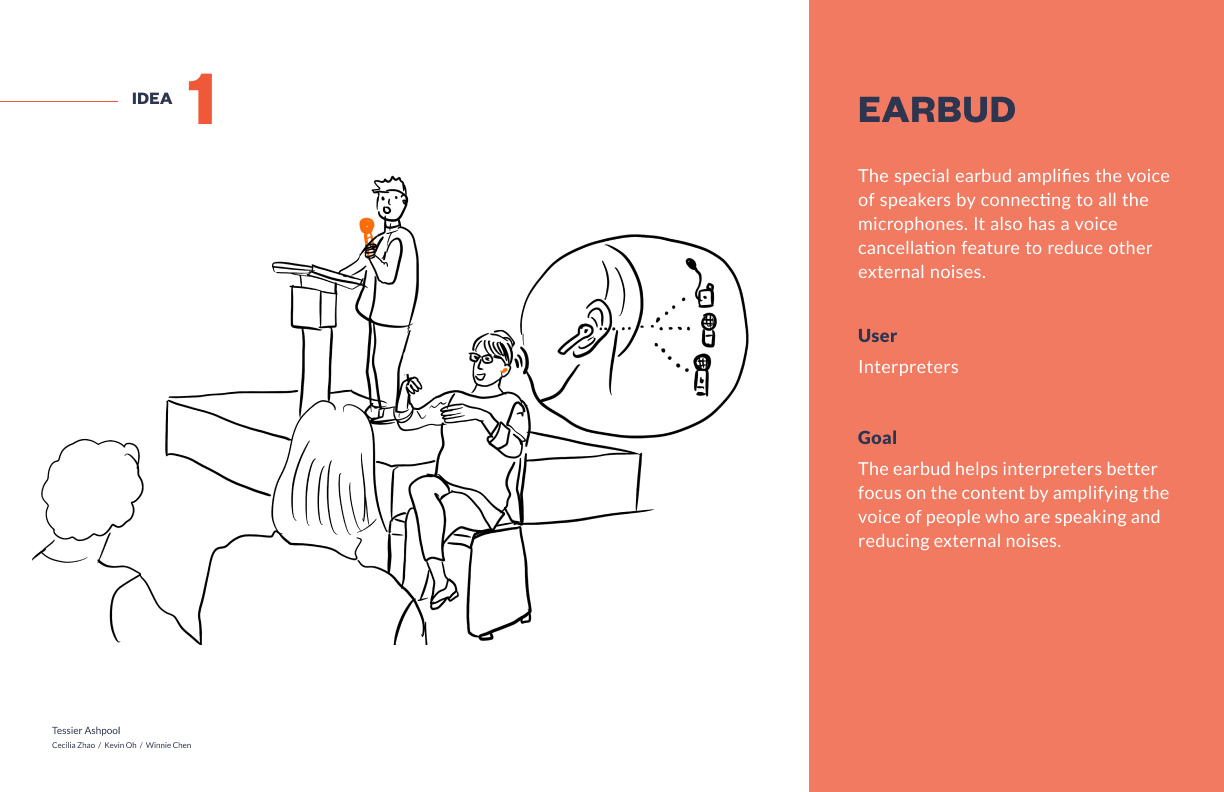

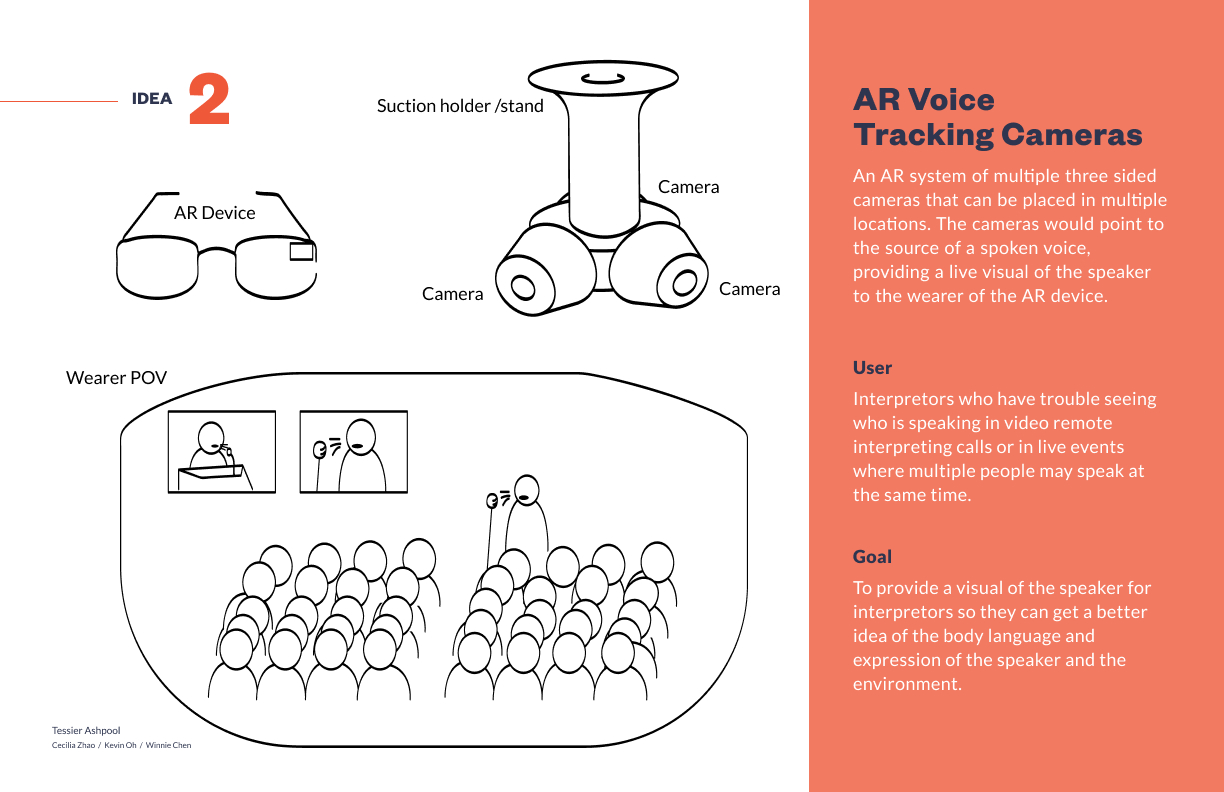

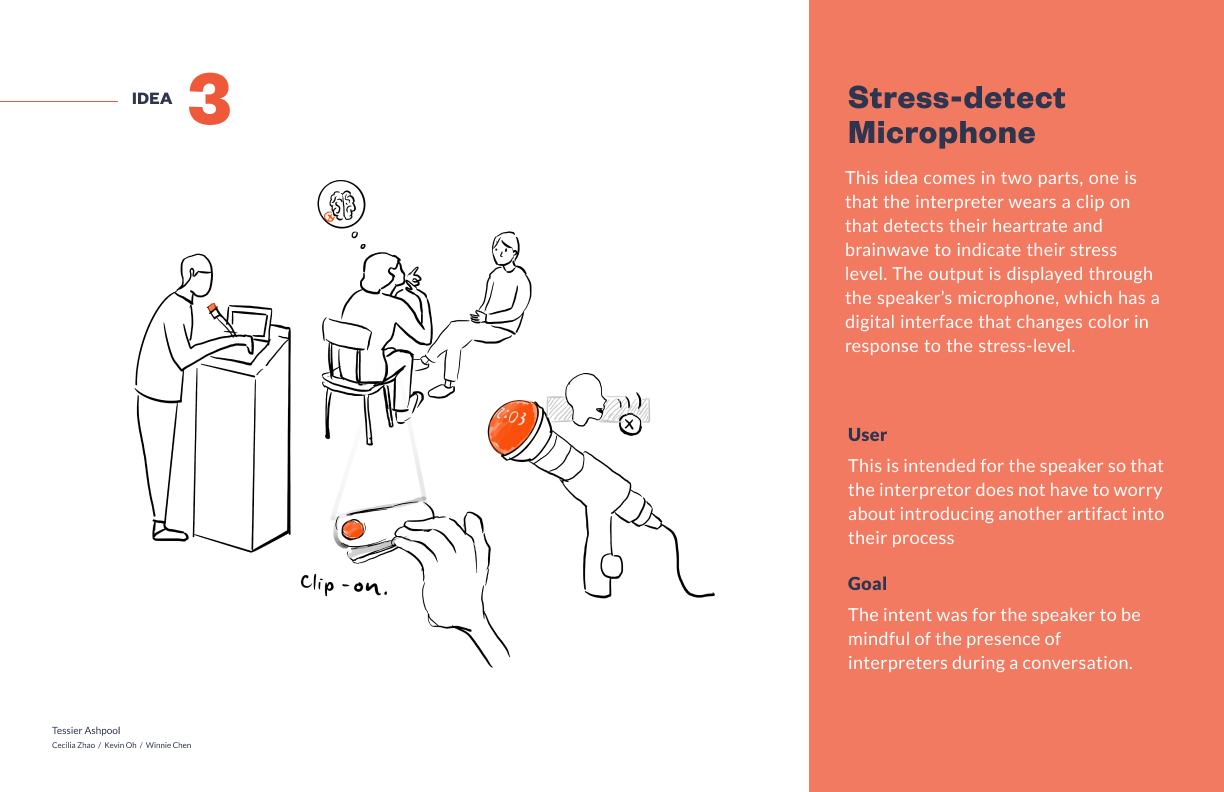

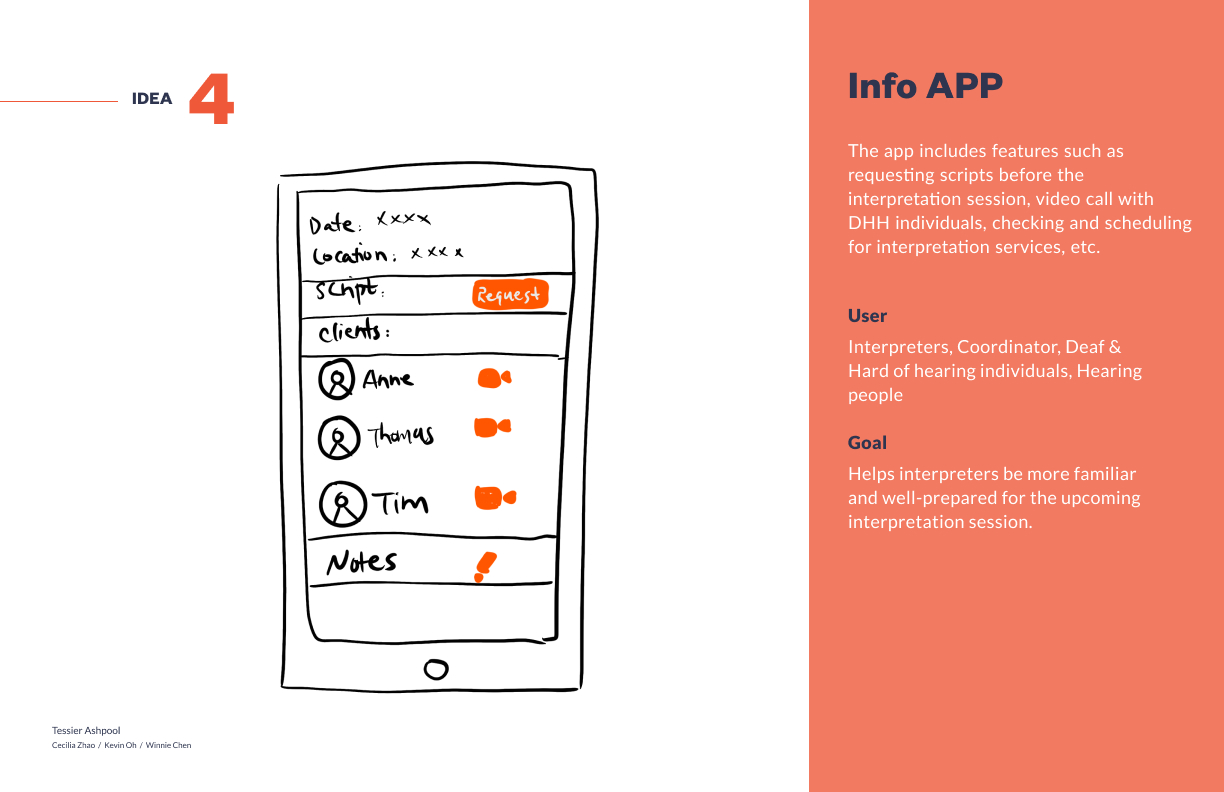

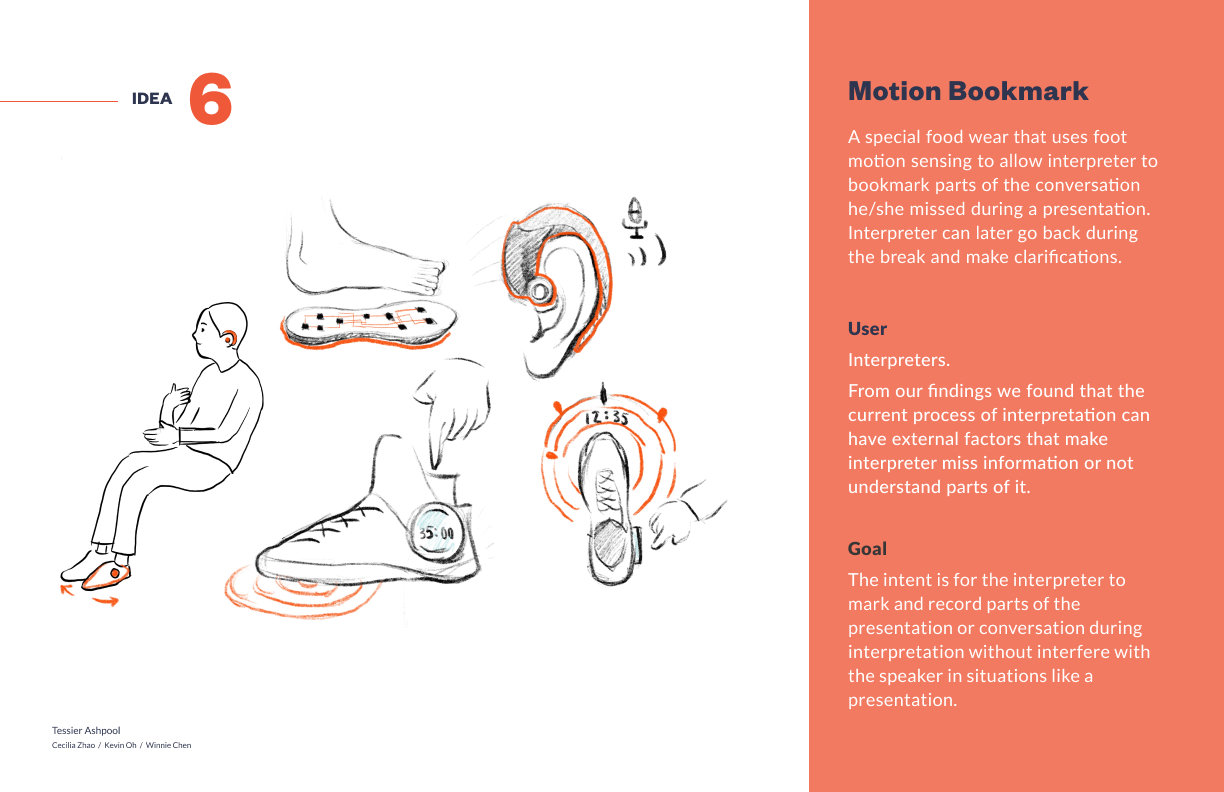

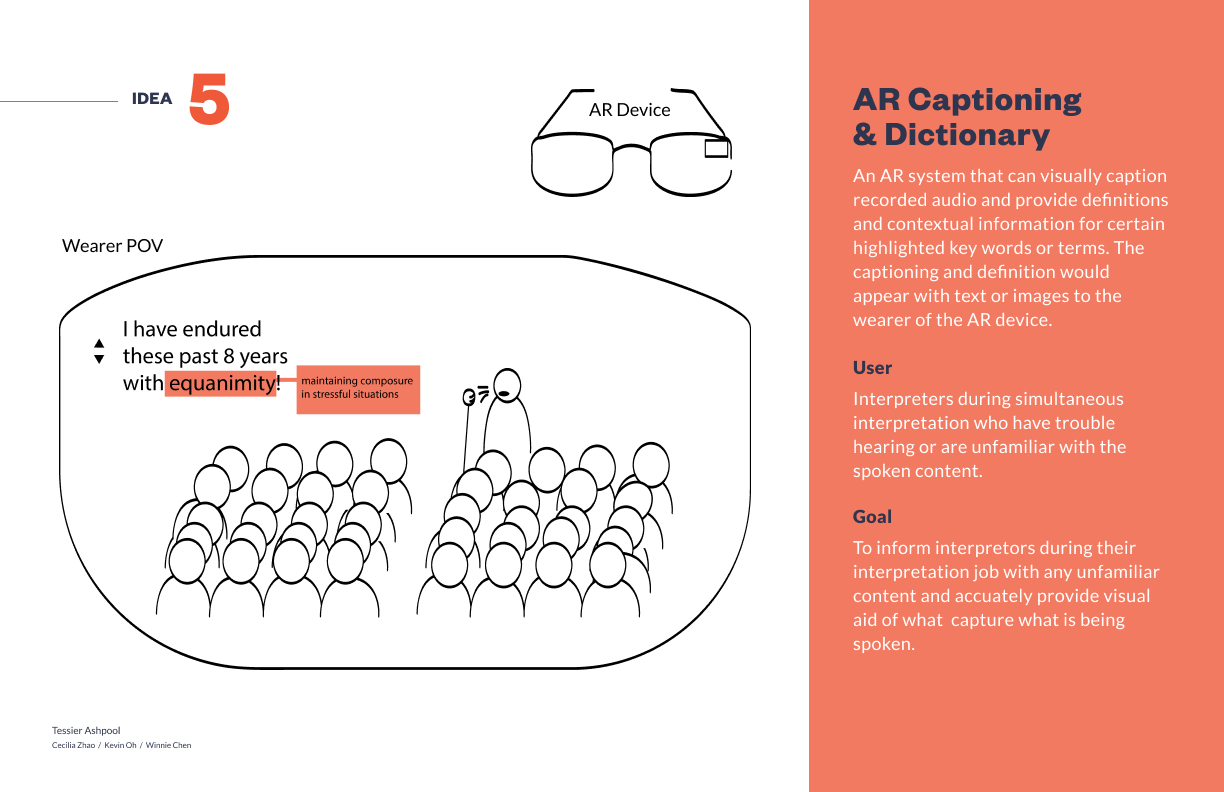

After several ideation sprints, our team narrowed our concepts down to six ideas. Of those six, we ultimately decided to explore idea 5. Despite the speculative nature of the AR and AI technology, we thought it was a nonintrusive intervention that aligned most closely with our principles and our goal of assisting interpreters.

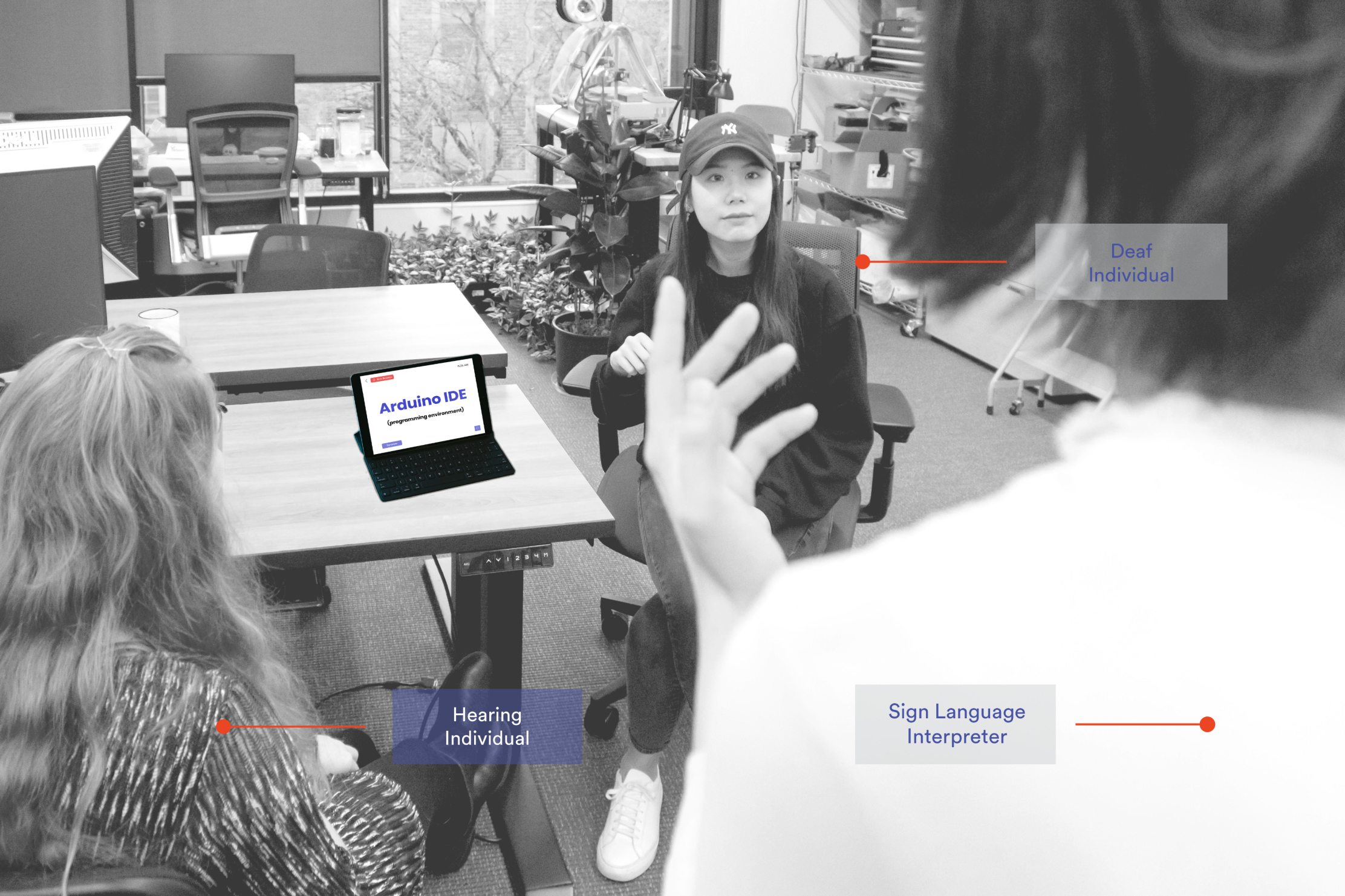

Concept Testing 1

We tested three variations of idea 5 using the Wizard of Oz method, role-playing an interpretation session to simulate what interpreters would see when using this AR technology. At the end, we asked them what they thought of each variation.

Goal:

To observe how interpreters respond to each variation and gauge how helpful the visual aids are.

Participants

What we learned

How we responded

1

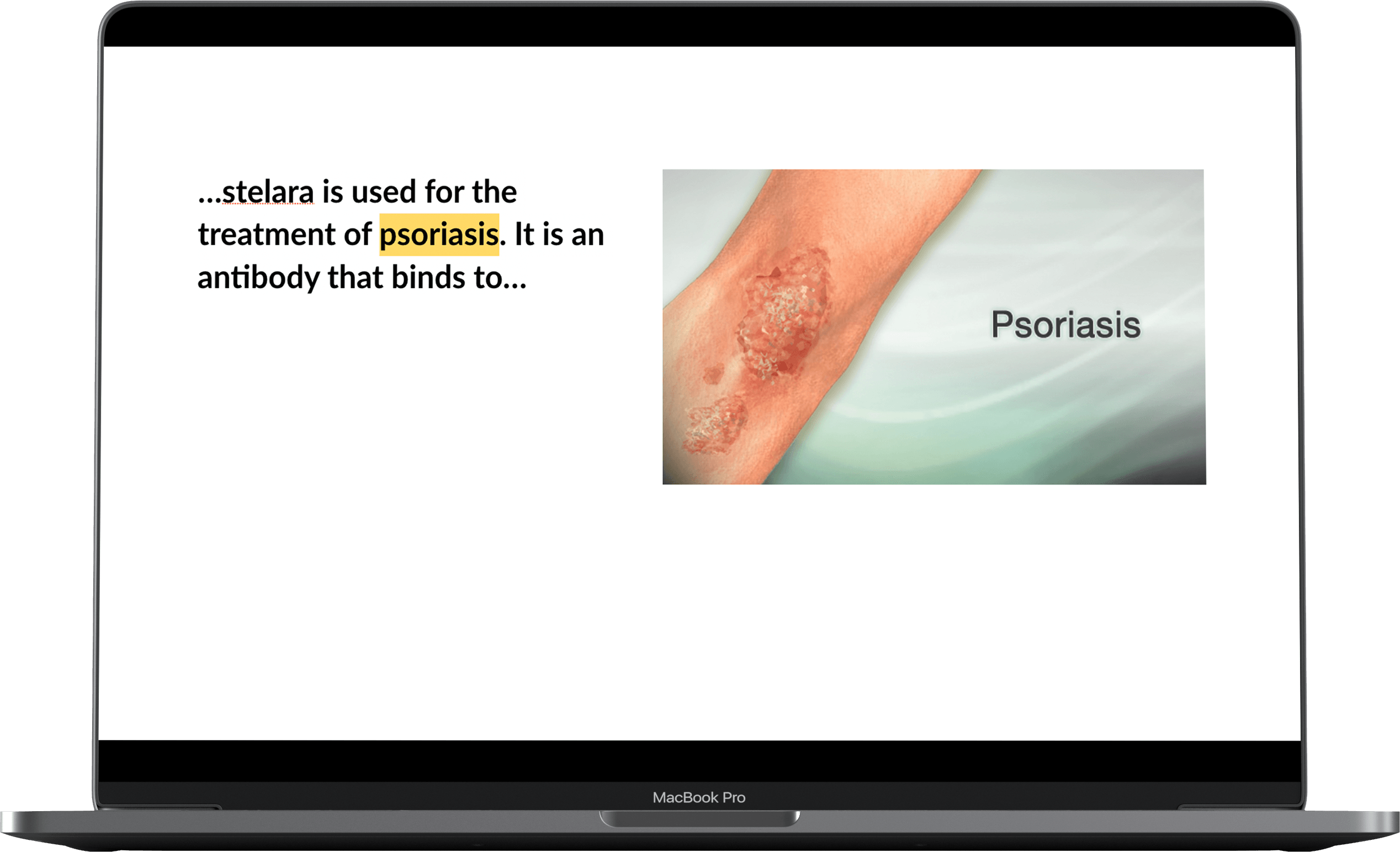

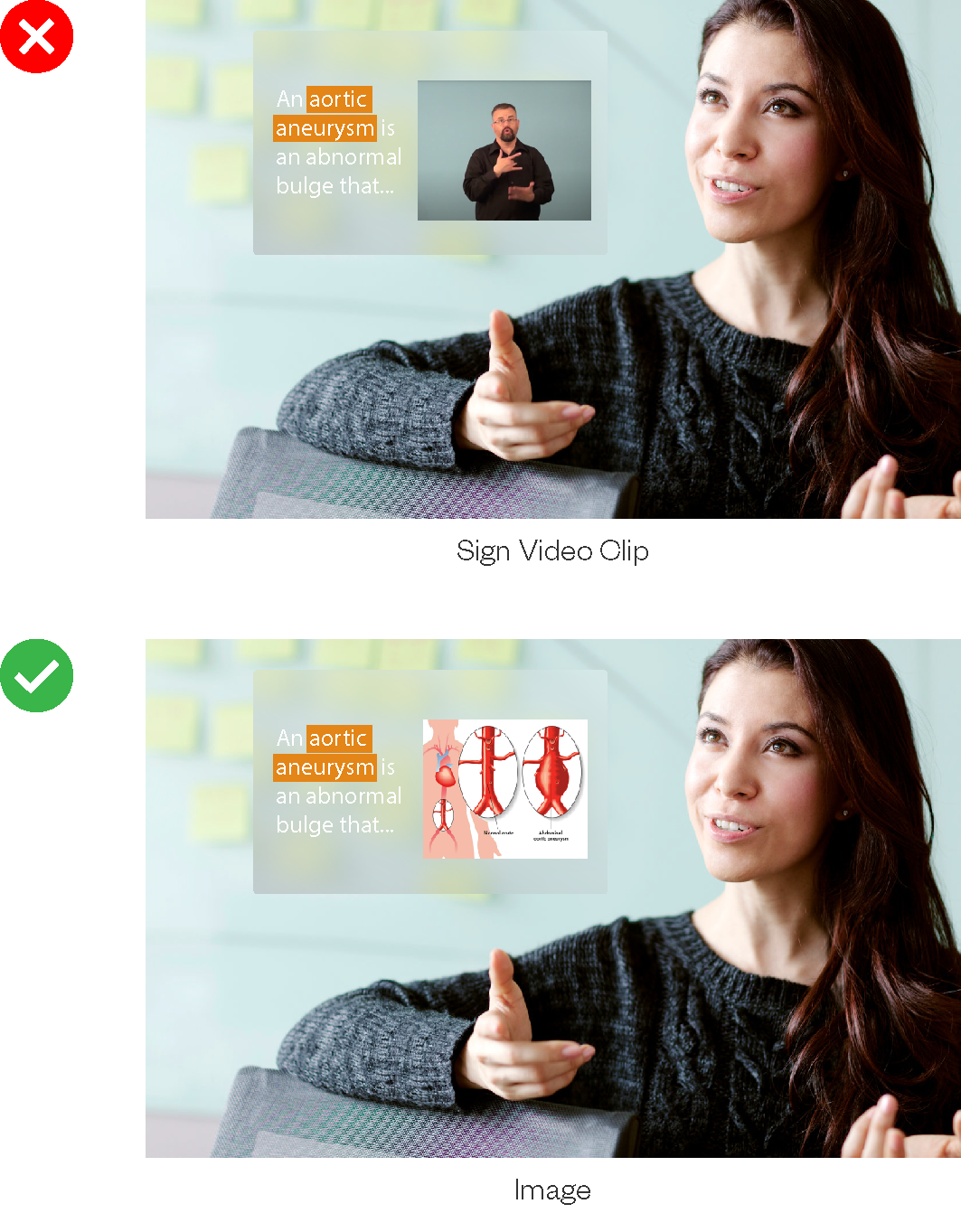

We quickly found out that the video definition clips were useless because they were visually distracting, often inaccurate, and almost impossible to mimic.

However, images could communicate the meaning of the highlighted term at a glance.

2

Fingerspelling occurs often in ASL since a lot of technical terms don't have a sign developed by the deaf community. Seeing the spelling helps the interpreter know how to fingerspell that term to the DHH individual.

It is also helpful in scenarios where interpreters don’t know the term or if they couldn’t hear or understand what was spoken.

3

Interpreters have a code of ethics to maintain the privacy of the user or patient.

-P3

3

Concept Testing 2

After the first rounds of testing, we created a video storyboard of our speculative AR concept and presented it to our participants. This was the first time participants got to see our design.

Goal:

We wanted to know whether interpreters could see themselves using this technology in the future, and what people who use interpreters think of it.

Participants

What we learned

How we responded

1

While our AR concept fascinated our participants, they couldn't really imagine using AR technology, especially without a real working device or prototype. This made the concept difficult to evaluate.

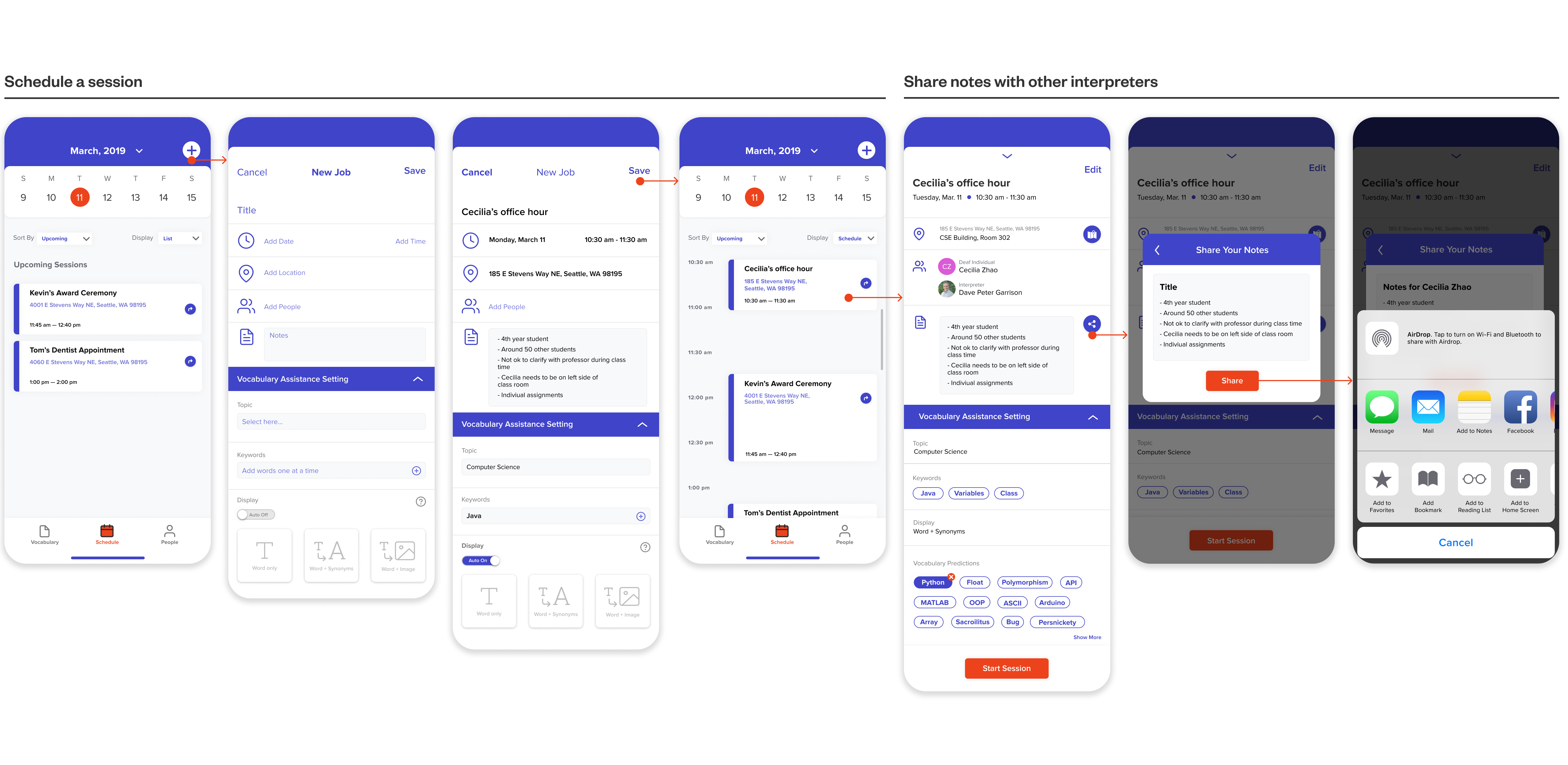

However, an app could also easily fit in with their workflows since interpreters are already using their phones for their jobs.

2

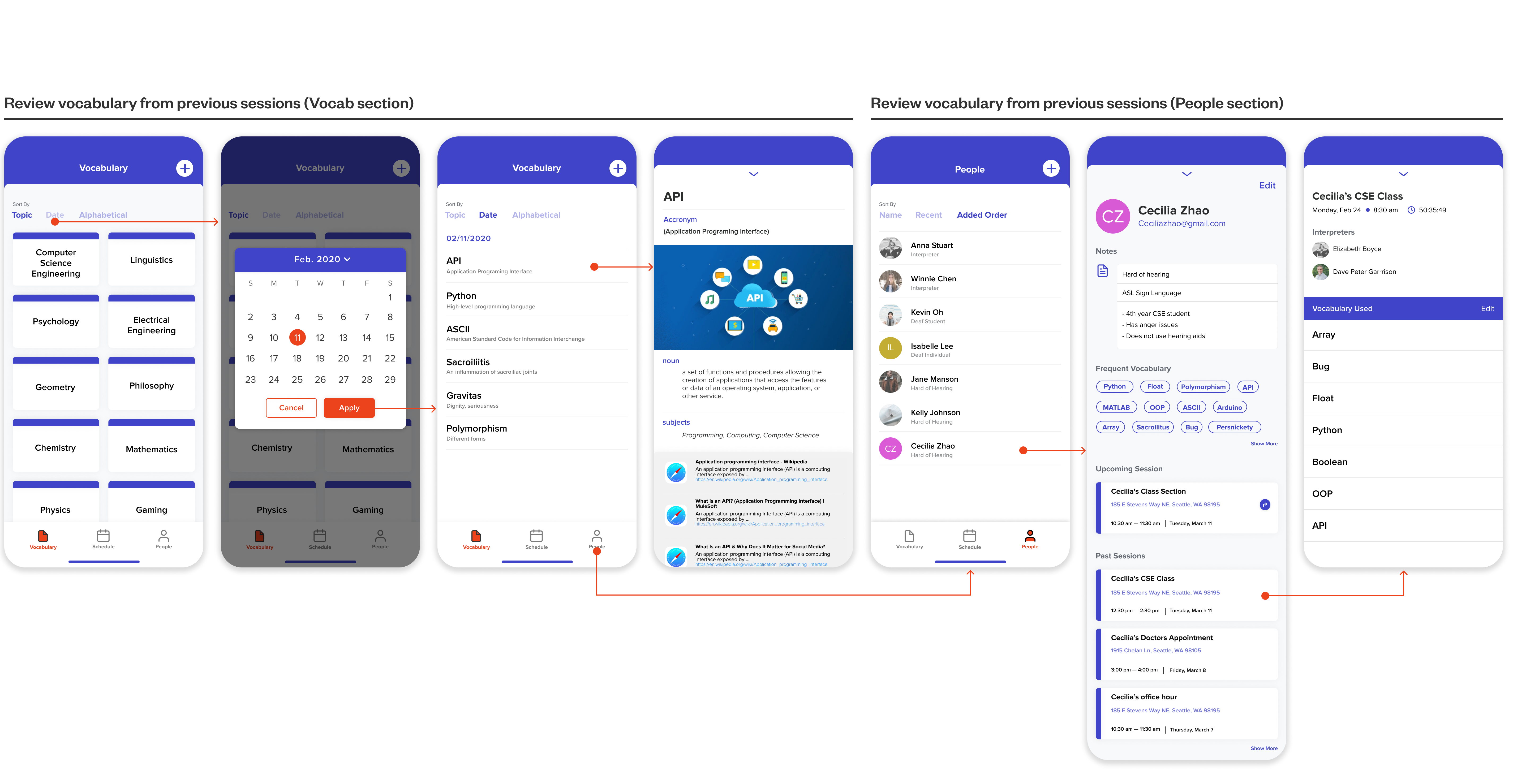

Interpreters reiterated that preparing for upcoming sessions is an important process, and that part of it is becoming familiar with the topic.

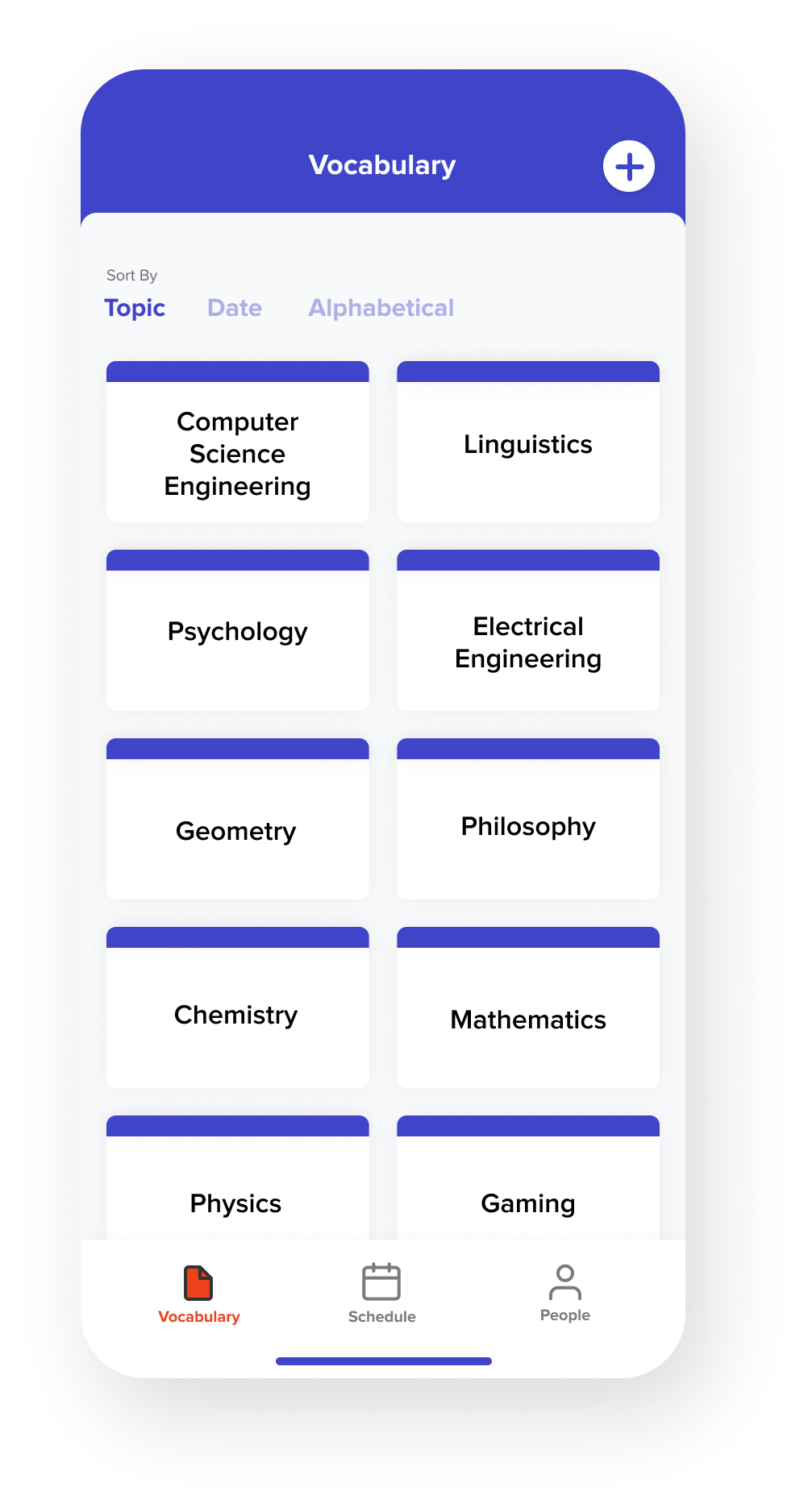

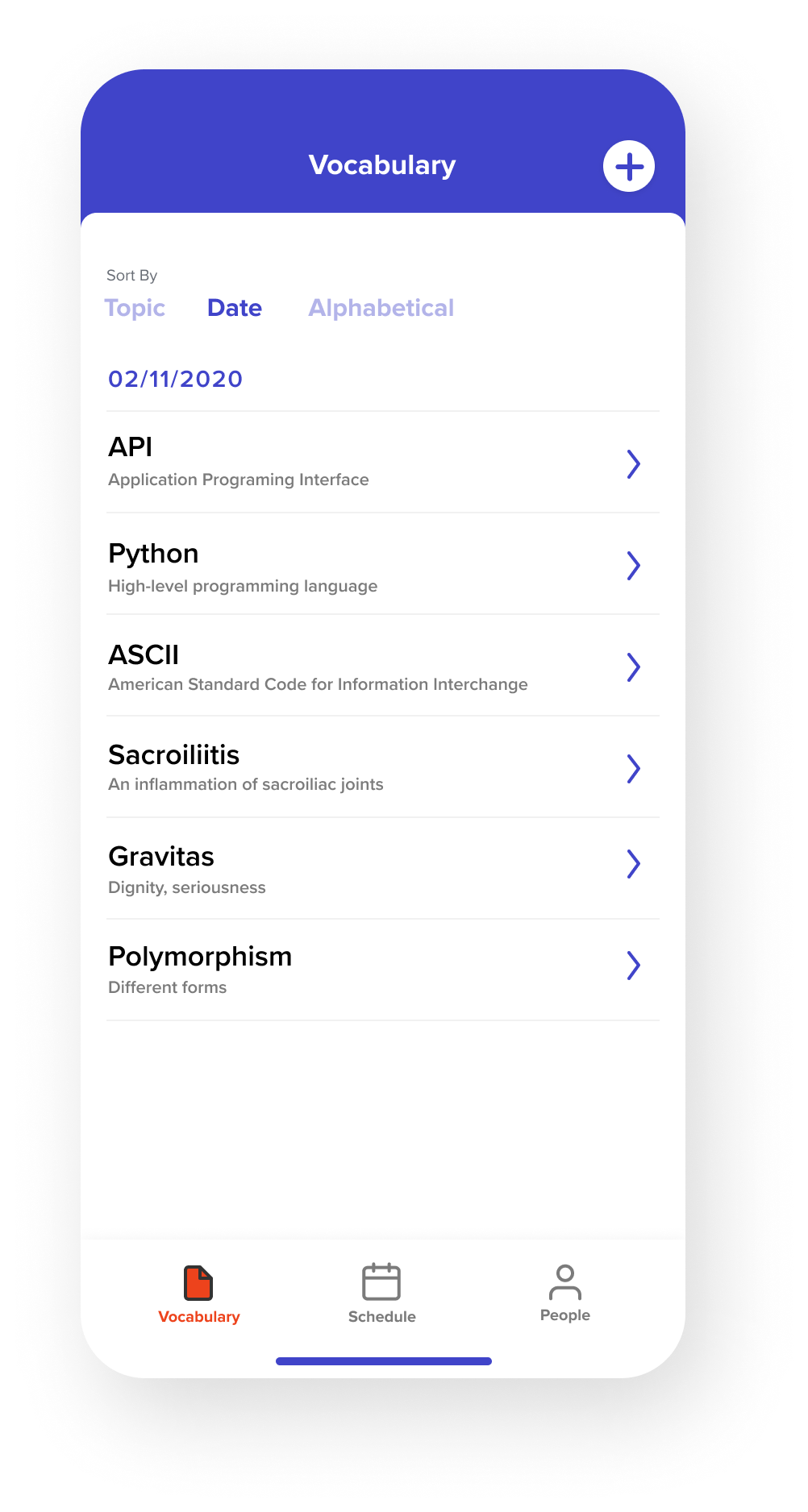

Luckily, because our concept shifted to an app, we could support the procedures interpreters follow before and even after their sessions, such as learning specialized vocabulary.

4

DHH people are often already excluded from information that is accessible to others. Also, just as it is difficult for interpreters to constantly fingerspell, it is difficult for the DHH individual to constantly read fingerspelling.

4

Screen mirroring would allow DHH individuals to process the information more quickly and feel included in the process. It could also reduce the need for fingerspelling, since the interpreter can just point to the device each time technical vocabulary comes up.

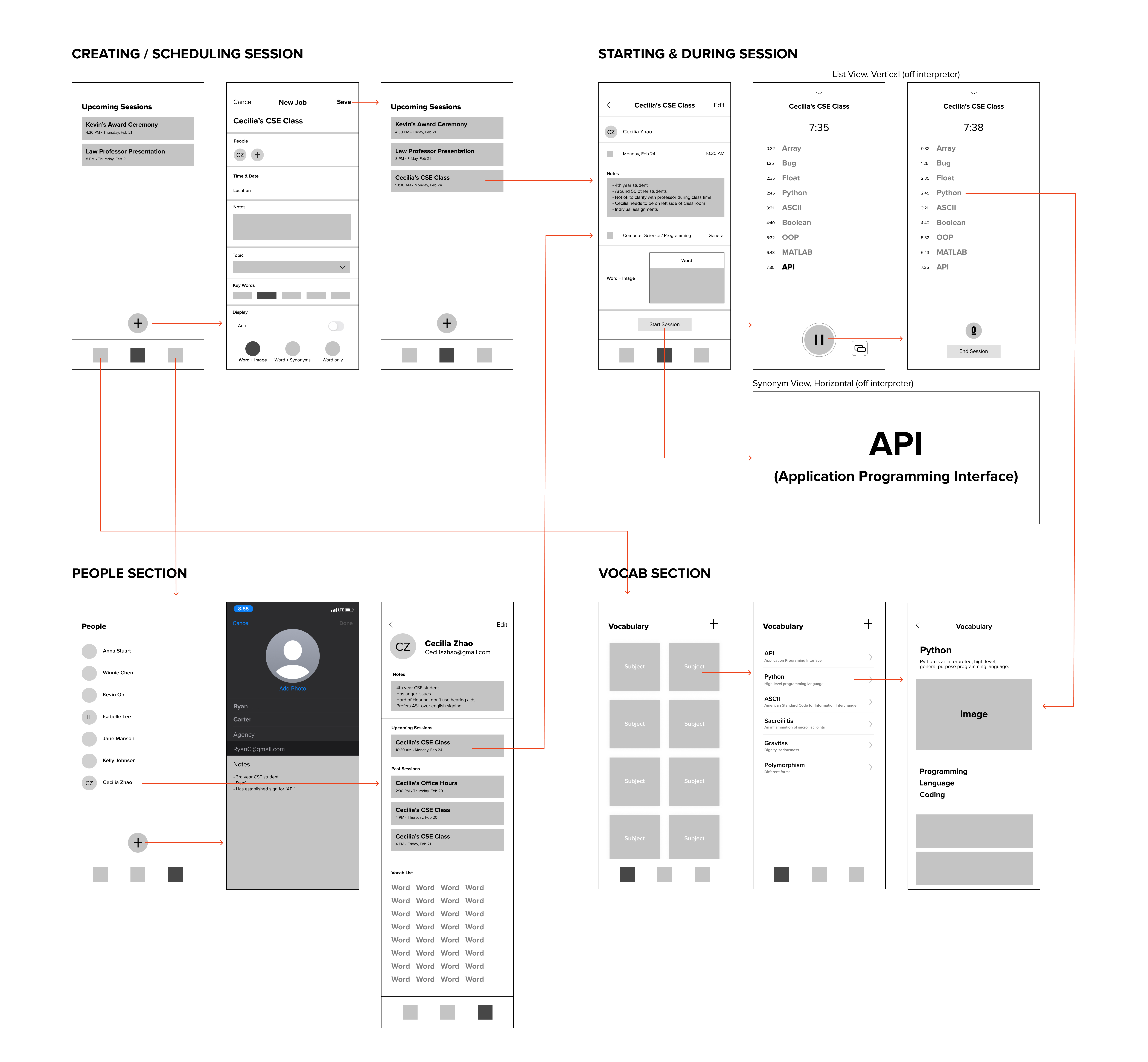

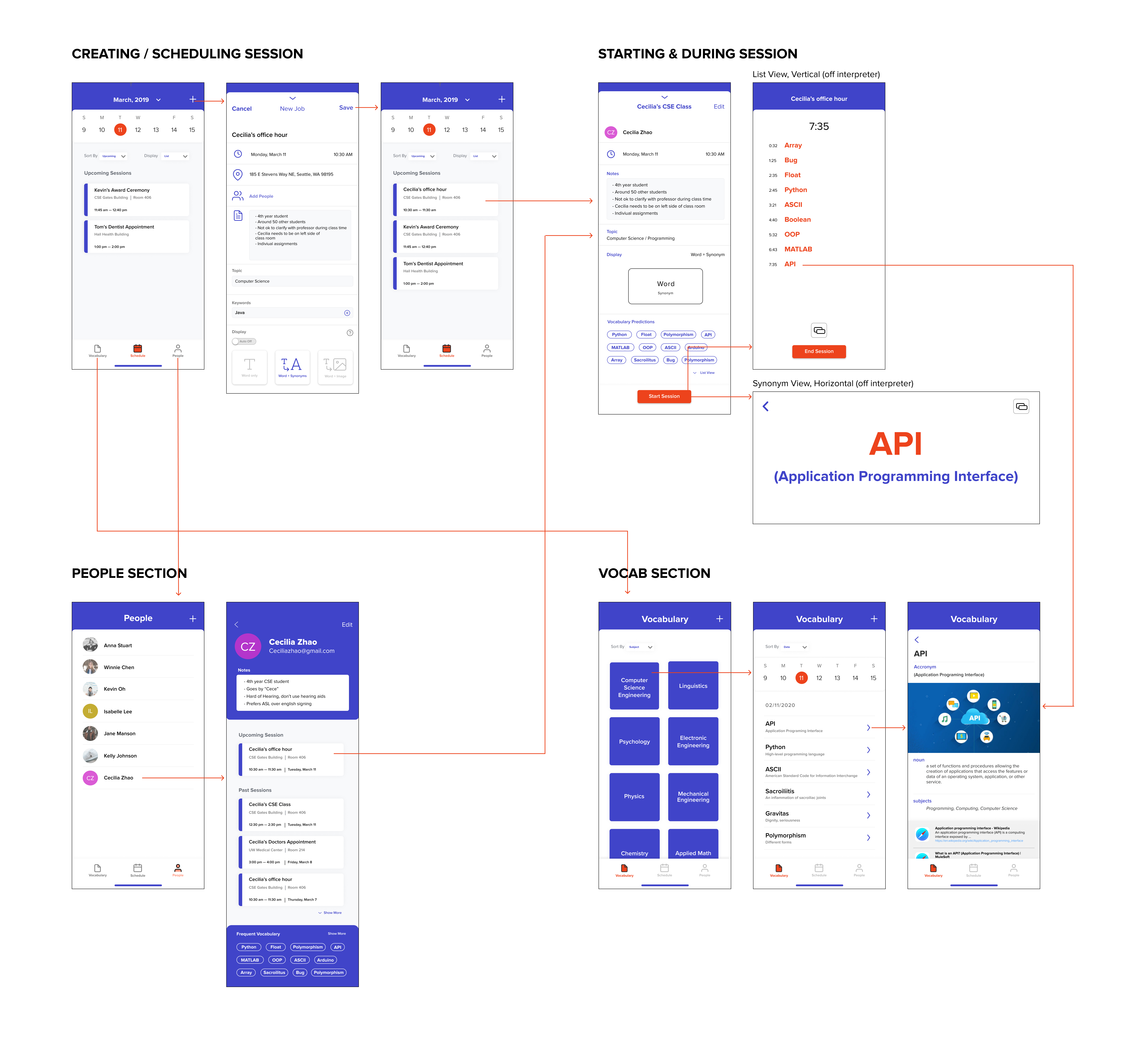

UX & UI Design

While we were gathering and synthesizing our learnings from Concept Testing 2, we were simultaneously designing the user experience and the interfaces.

Usability Testing

After designing the interfaces and an interactive prototype in Figma, we conducted usability testing with two new participants. They were thrilled and impressed by the idea of a product designed for interpreters, since interpreters are often overlooked when it comes to inclusive technology.

Goals:

- See if the UI would communicate its functionality to a first-time user

- See if the app's structure is intuitively navigable

- Get feedback on the existing features

- Validate the need for this product within their current workflows

Participants

What we learned

How we responded

1

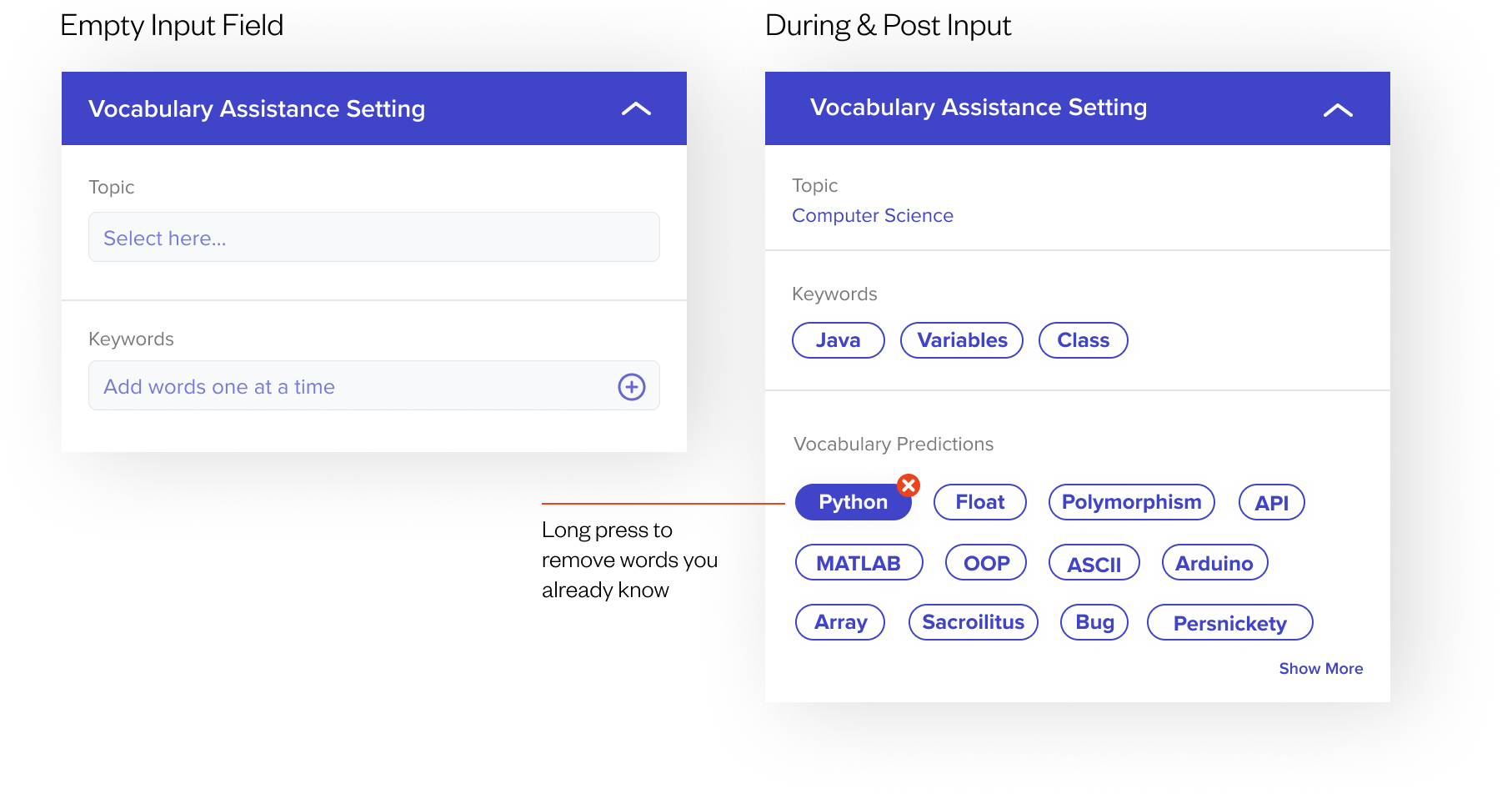

The terms that are highlighted should be terms that the interpreter does not know. Because knowledge levels are different, we had to personalize the experience depending on interpreters' familiarity with various topics and words.

1

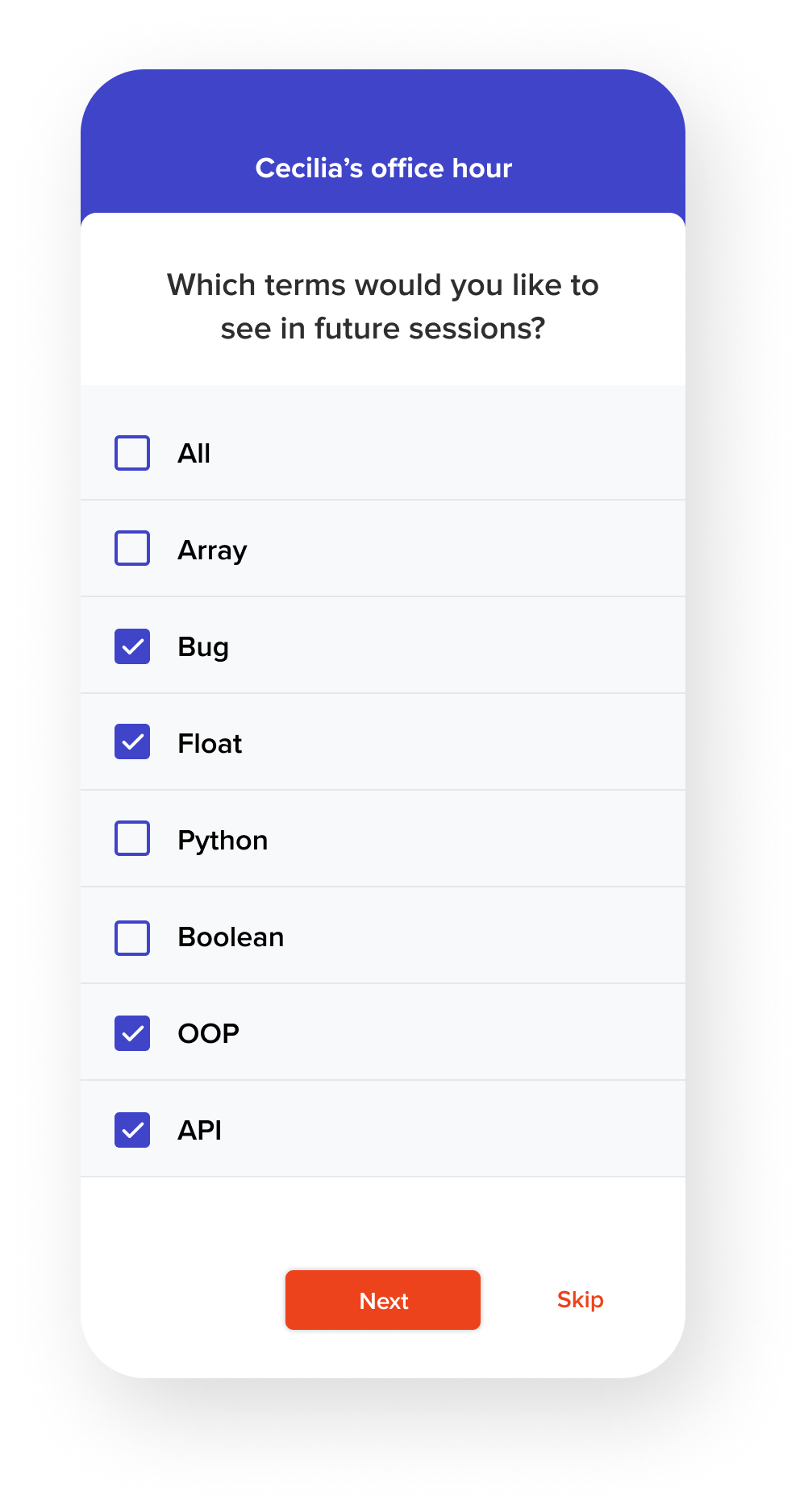

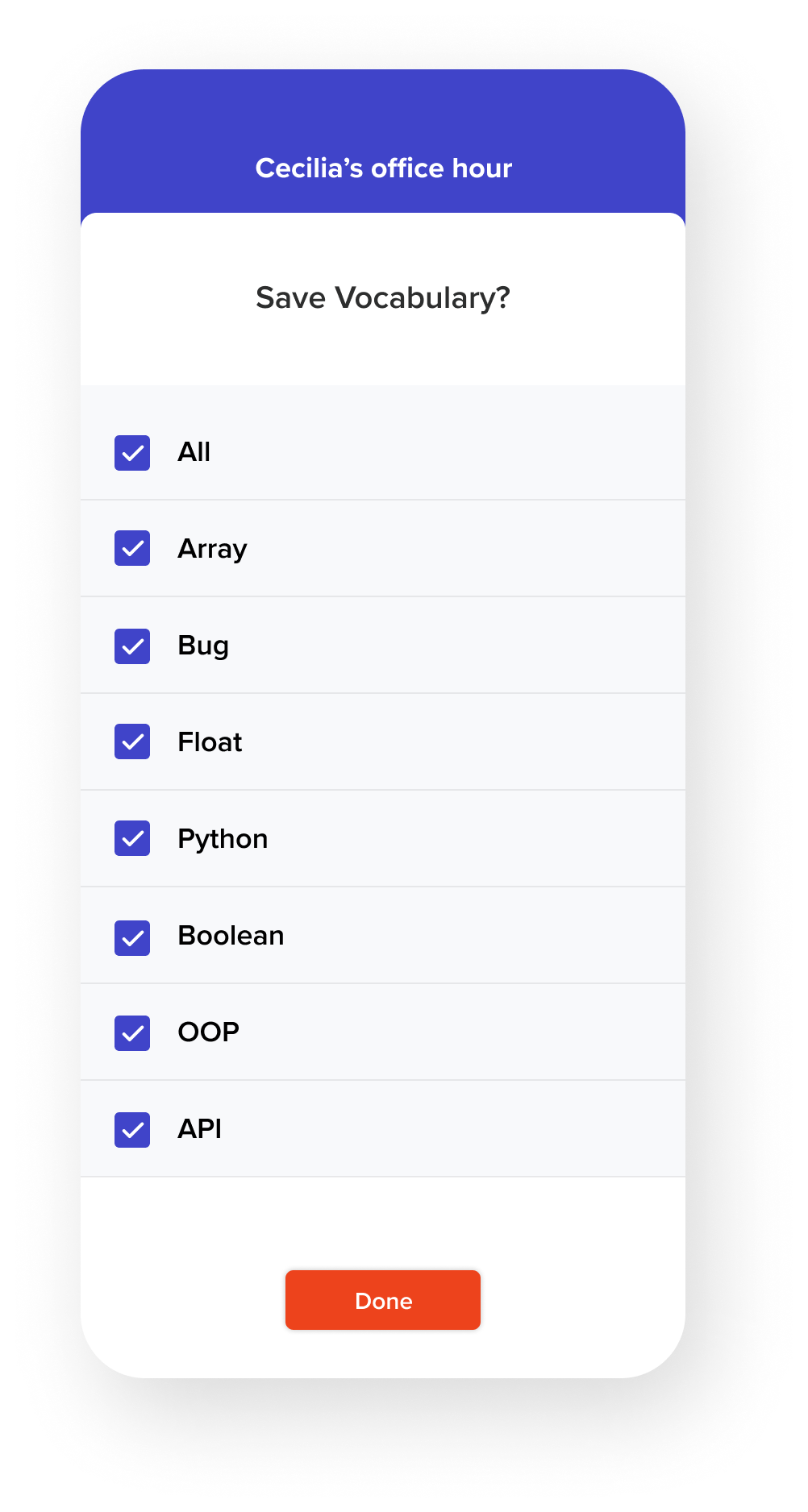

When interpreters input the topic and keywords they know before the session, the AI will generate a list of vocabulary that might occur in the session. They can remove words they already know from this list. This would help the AI identify the interpreter’s vocabulary level and decide which terms to highlight during the session.
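The personalization step above can be sketched in a few lines. This is only a hypothetical illustration, not how SignSavvy is built: the concept is speculative, so the function name `terms_to_highlight`, the hard-coded word lists, and the single-word matching are all assumptions standing in for the AI-generated vocabulary and live speech recognition.

```python
import re


def terms_to_highlight(transcript: str, suggested: set[str], known: set[str]) -> list[str]:
    """Return the unfamiliar suggested terms, in the order they are first spoken.

    Hypothetical helper: `suggested` stands in for the AI-generated vocabulary
    list, and `known` for the words the interpreter removed from that list.
    """
    # Terms the interpreter has not marked as known are candidates for highlighting.
    unfamiliar = {t.lower() for t in suggested} - {t.lower() for t in known}

    seen: set[str] = set()
    highlights: list[str] = []
    # Simplification: match single words only; multi-word terms are not handled.
    for word in re.findall(r"[a-z']+", transcript.lower()):
        if word in unfamiliar and word not in seen:
            seen.add(word)
            highlights.append(word)
    return highlights


# Example session: the interpreter removed "catheter" from the suggested list.
suggested = {"stent", "angioplasty", "catheter"}
known = {"catheter"}
spoken = "The catheter guides the stent. After angioplasty, the stent stays."
print(terms_to_highlight(spoken, suggested, known))
```

In this sketch, "catheter" is never highlighted because the interpreter removed it before the session, and "stent" is highlighted only the first time it is spoken.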

2

For any session that lasts longer than an hour, interpreters work in pairs and switch roles every 20 minutes so that cognitive overload doesn't cause them to omit information. The role of the “off” interpreter is to be another set of eyes and ears and to assist the “on” interpreter by feeding them any information or terms they might have missed or signed incorrectly.

2

When the “off” interpreter is using the app, they can switch to a vertical list view that shows a live list of the words highlighted in that session. This would help the “off” interpreter track the conversation and feed information to the “on” interpreter.

3

Once interpreters learn a new term, there is no need for it to show up again in future sessions.

If I did it again...

When we designed this concept, we tried to cater the experience to the typical use case for an interpreter, where they are able to schedule a session and prepare beforehand. Currently, the interaction model requires the interpreter to create a session, save it, click back into it, and then start that session.

What should the interaction be like for interpreters who forgot or didn't have time to set up a session, and want to go right into using the real-time assistance?

Although this wouldn't be the typical use case for an interpreter, a good design should also work for the edge cases. I believe interpreters would appreciate the freedom to go straight into in-session mode when they haven't created a session beforehand. I can also see how interpreters might mistake this for just a scheduling app, since the main feature (in-session assistance) isn't available from the first screen they see.

Therefore, if I were to continue with this project, I would make a way for the user to go straight into in-session mode and allow them to fill in the job information afterwards.

Design Implications

In every project that has the potential to affect people, I believe that as designers we carry the responsibility to question and carefully consider the implications and potential problems/unintended outcomes that might arise from the design. These are a few questions I came up with about how SignSavvy might affect people.

- How might this negatively affect how interpreters learn on the job?

For example, will assistive technology prevent interpreters from relying on their own memory and skill?

Will they rely too much on the tech?

- How would DHH people feel about interpreters using assistive tech?

Would DHH individuals have a say in whether their interpreter uses this or not?

- Would using this technology be an indicator to others that the interpreter is a novice?

How might that change people's behavior?

- Will more people actually become interpreters with this technology available?

- Can this technology be expanded to other areas of communication or learning?

- How might this technology affect how humans interact with one another? (eye contact, dependency on mobile devices, etc.)

I do not know the answers to these questions. We weren't able to test on a large scale, but these questions are important to ask and think about. If this were to be developed and released in the real world, we would need to find answers to them.

Takeaways

Before this project, I thought design research was valuable but only to a certain extent. Now I have learned that research should always happen if the time and resources are available. Each time we went out and interviewed or tested, we learned something new that changed our direction. Diligent research really helps us understand not only the people we are designing for, but also the thoughts, attitudes, and routines that drive how they interact with the world.

Another takeaway is that asking the right questions early in the design process is crucial, because those questions lead to valuable answers that act as the foundation for the design.

The most valuable takeaway from this project is not coming up with a cool product or design. It is how much I was able to learn from diving into unfamiliar topics: deaf culture, sign language, and interpreting. I got to learn about how others navigate and interact with the world differently. I also grew to love interpreters, not just for how well they handle their difficult job but even more for their passion and dedication to accessibility and inclusion for DHH people. Because I learned more about deaf culture and DHH people, I have the perspective to look into their world and be a more knowledgeable and empathetic designer and person.